A lot more scientific studies than you think could be wrong

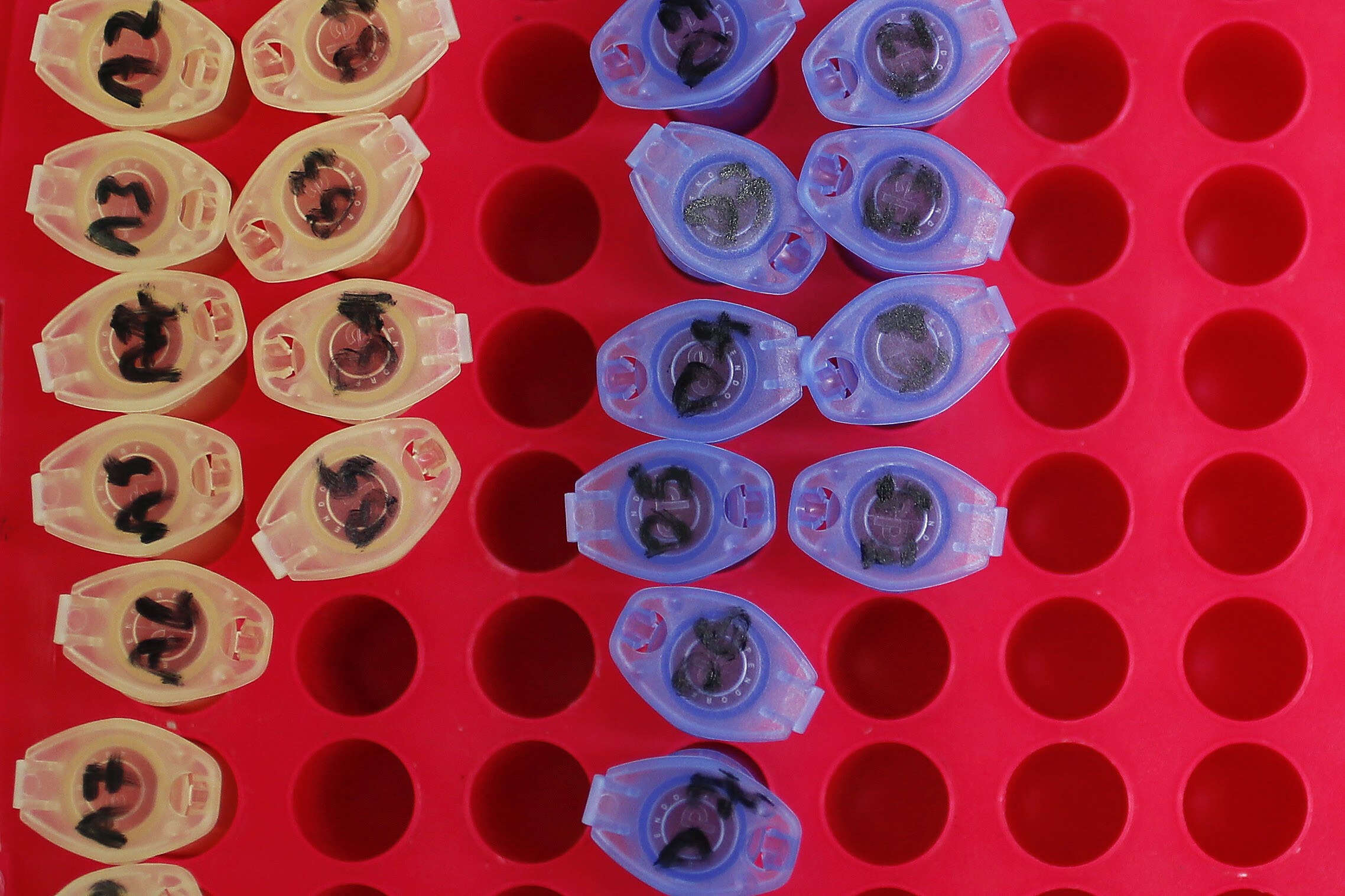

To draw conclusions from data, researchers usually rely on significance testing. Image: REUTERS/Damir Sagolj

There is a replicability crisis in science – unidentified “false positives” are pervading even our top research journals.

A false positive is a claim that an effect exists when in actuality it doesn’t. No one knows what proportion of published papers contain such incorrect or overstated results, but there are signs that the proportion is not small.

The epidemiologist John Ioannidis gave the best explanation for this phenomenon in a famous paper in 2005, provocatively titled “Why Most Published Research Findings Are False”. One of the reasons Ioannidis gave for so many false results has come to be called “p hacking”, which arises from the pressure researchers feel to achieve statistical significance.

What is statistical significance?

To draw conclusions from data, researchers usually rely on significance testing. In simple terms, this means calculating the “p value”, which is the probability of obtaining results at least as extreme as ours if there really is no effect. If the p value is sufficiently small, the result is declared to be statistically significant.

Traditionally, a p value of less than .05 is the criterion for significance. If you report a p<.05, readers are likely to believe you have found a real effect. Perhaps, however, there is actually no effect and you have reported a false positive.
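To make the definition concrete, here is a minimal sketch of one way to obtain a p value: a permutation test, which shuffles the group labels many times and counts how often chance alone produces a difference at least as large as the one observed. The function name, the anxiety scores and the number of shuffles are all invented for illustration; a real study would more often use a standard test from a statistics package.

```python
import random


def permutation_p_value(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation-test p value for a difference in group means."""
    rng = random.Random(seed)
    n_a = len(group_a)
    observed = abs(sum(group_a) / n_a - sum(group_b) / len(group_b))
    pooled = list(group_a) + list(group_b)
    n_b = len(pooled) - n_a
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        # If there is no effect, group labels are arbitrary, so shuffle them.
        rng.shuffle(pooled)
        diff = abs(sum(pooled[:n_a]) / n_a - sum(pooled[n_a:]) / n_b)
        if diff >= observed:
            at_least_as_extreme += 1
    # Add 1 to numerator and denominator so the estimate is never exactly 0.
    return (at_least_as_extreme + 1) / (n_permutations + 1)


# Hypothetical anxiety scores, invented for illustration only.
treated = [12, 15, 11, 14, 10, 13, 9, 12]
control = [16, 18, 15, 17, 14, 19, 16, 15]
p = permutation_p_value(treated, control)
print(f"p = {p:.4f}, significant at .05: {p < 0.05}")
```

A small p here says only that data like these would be unusual if there were no effect. It does not say how large the effect is, or how likely it is to be real.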

Many journals will only publish studies that can report one or more statistically significant effects. Graduate students quickly learn that achieving the mythical p<.05 is the key to progress, obtaining a PhD and the ultimate goal of achieving publication in a good journal.

This pressure to achieve p<.05 leads to researchers cutting corners, knowingly or unknowingly, for example by p hacking.

The lure of p hacking

To illustrate p hacking, here is a hypothetical example.

Bruce has recently completed a PhD and has landed a prestigious grant to join one of the top research teams in his field. His first experiment doesn’t work out well, but Bruce quickly refines the procedures and runs a second study. This looks more promising, but still doesn’t give a p value of less than .05.

Convinced that he is onto something, Bruce gathers more data. He decides to drop a few of the results that looked clearly aberrant.

He then notices that one of his measures gives a clearer picture, so he focuses on that. A few more tweaks and Bruce finally identifies a slightly surprising but really interesting effect that achieves p<.05. He carefully writes up his study and submits it to a good journal, which accepts his report for publication.

Bruce tried so hard to find the effect that he knew was lurking somewhere. He was also feeling the pressure to hit p<.05 so he could declare statistical significance, publish his finding and taste sweet success.

There is only one catch: there was actually no effect. Despite the statistically significant result, Bruce has published a false positive.

Bruce felt he was using his scientific insight to reveal the lurking effect as he took various steps after starting his study:

He collected further data.

He dropped some data that seemed aberrant.

He dropped some of his measures and focused on the most promising.

He analysed the data a little differently and made a few further tweaks.

The trouble is that all these choices were made after seeing the data. Bruce may, unconsciously, have been cherry-picking – selecting and tweaking until he obtained the elusive p<.05. Even when there is no effect, such selecting and tweaking might easily find something in the data for which p<.05.

Statisticians have a saying: if you torture the data enough, they will confess. Choices and tweaks made after seeing the data are questionable research practices. Using these, deliberately or not, to achieve the right statistical result is p hacking, which is one important reason that published, statistically significant results may be false positives.
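A short simulation can show how much difference such choices make. The sketch below is not Bruce's study. It is a toy model under stated assumptions: every measure is pure noise (the true effect is exactly zero), the spread of the data is taken as known so that a simple z test applies, and the "flexible" researcher is allowed only two of the tweaks described above, namely trying several measures and collecting more data after a first look. All names and settings are hypothetical.

```python
import random
from math import sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()


def z_test_p(sample):
    """Two-sided p value for 'the mean is zero', assuming a known SD of 1."""
    z = (sum(sample) / len(sample)) * sqrt(len(sample))
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))


def one_study(rng, hack, n_start=20, n_extra=10, n_measures=3):
    """Return True if a study of pure noise ends with p < .05."""
    # Every measure is noise only: there is no effect to find.
    measures = [[rng.gauss(0, 1) for _ in range(n_start)]
                for _ in range(n_measures)]
    if not hack:
        # Planned analysis: first measure only, fixed sample size.
        return z_test_p(measures[0]) < 0.05
    # Flexible analysis: report whichever measure reaches p < .05 ...
    if any(z_test_p(m) < 0.05 for m in measures):
        return True
    # ... and if none does, gather more data and look again.
    for m in measures:
        m.extend(rng.gauss(0, 1) for _ in range(n_extra))
    return any(z_test_p(m) < 0.05 for m in measures)


def false_positive_rate(hack, n_studies=5000, seed=1):
    rng = random.Random(seed)
    return sum(one_study(rng, hack) for _ in range(n_studies)) / n_studies


planned = false_positive_rate(hack=False)
flexible = false_positive_rate(hack=True)
print(f"planned analysis:  {planned:.3f}")
print(f"flexible analysis: {flexible:.3f}")
```

The planned analysis should land close to the nominal 5 per cent, while the flexible one comes out well above it, even though no single step looks unreasonable on its own.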

What proportion of published results are wrong?

This is a good question, and a fiendishly tricky one. No one knows the answer, which is likely to be different in different research fields.

A large and impressive effort to answer the question for social and cognitive psychology was published in 2015. Led by Brian Nosek and his colleagues at the Center for Open Science, the Reproducibility Project: Psychology (RP:P) had 100 research groups around the world each carry out a careful replication of one of 100 published results. Overall, roughly 40 replicated fairly well, whereas in around 60 cases the replication studies obtained smaller or much smaller effects.

The 100 RP:P replication studies reported effects that were, on average, just half the size of the effects reported by the original studies. The carefully conducted replications are probably giving more accurate estimates than the possibly p hacked original studies, so we could conclude that the original studies overestimated true effects by, on average, a factor of two. That’s alarming!
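One mechanism that can produce this kind of overestimate is easy to simulate. The sketch below assumes a small true effect, runs many identical studies, and "publishes" only those that reach p<.05. It illustrates selection on significance in general and makes no attempt to model the RP:P data: the true effect, sample size and function name are invented, and the size of the inflation depends entirely on those choices.

```python
import random
from math import sqrt
from statistics import NormalDist


def simulate_published_effects(true_effect=0.2, n=25, n_studies=20_000, seed=2):
    """Return (mean of all estimates, mean of 'published' estimates)."""
    rng = random.Random(seed)
    critical_z = NormalDist().inv_cdf(0.975)  # two-sided .05 criterion
    standard_error = 1 / sqrt(n)              # assumes a known SD of 1
    all_estimates, published = [], []
    for _ in range(n_studies):
        # The mean of n noisy observations varies around the true effect.
        estimate = rng.gauss(true_effect, standard_error)
        all_estimates.append(estimate)
        # Only statistically significant results make it into print.
        if abs(estimate / standard_error) > critical_z:
            published.append(estimate)
    return (sum(all_estimates) / len(all_estimates),
            sum(published) / len(published))


mean_all, mean_published = simulate_published_effects()
print(f"true effect:                0.200")
print(f"average over all studies:   {mean_all:.3f}")
print(f"average over published set: {mean_published:.3f}")
```

Averaged over every study, the estimates centre on the true effect. Averaged over the significant ones only, they come out well above it, because a small study of a small effect reaches p<.05 mainly when chance pushes its estimate upwards.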

How to avoid p hacking

The best way to avoid p hacking is to avoid making any selection or tweaks after seeing the data. In other words, avoid questionable research practices. In most cases, the best way to do this is to use preregistration.

Preregistration requires that you prepare in advance a detailed research plan, including the statistical analysis to be applied to the data. Then you preregister the plan, with date stamp, at the Open Science Framework or some other online registry.

Then carry out the study, analyse the data in accordance with the plan, and report the results, whatever they are. Readers can check the preregistered plan and thus be confident that the analysis was specified in advance, and not p hacked. Preregistration is a challenging new idea for many researchers, but likely to be the way of the future.

Estimation rather than p values

The temptation to p hack is one of the big disadvantages of relying on p values. Another is that the p<.05 criterion encourages black-and-white thinking: an effect is either statistically significant or it isn’t, which sounds rather like saying an effect exists or it doesn’t.

But the world is not black and white. To recognise the numerous shades of grey it’s much better to use estimation rather than p values. The aim with estimation is to estimate the size of an effect – which may be small or large, zero, or even negative. In terms of estimation, a false positive result is an estimate that’s larger or much larger than the true value of an effect.

Let’s take a hypothetical study on the impact of therapy. The study might, for example, estimate that therapy gives, on average, a 7-point decrease in anxiety. Suppose we calculate from our data a confidence interval – a range of uncertainty either side of our best estimate – of [4, 10]. This tells us that our estimate of 7 is, most likely, within about 3 points on the anxiety scale of the true effect – the true average amount of benefit of the therapy.

In other words, the confidence interval indicates how precise our estimate is. Knowing such an estimate and its confidence interval is much more informative than any p value.
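As a rough sketch of how such an interval can be calculated, the code below takes a set of per-person decreases in anxiety score and returns the estimate with a 95% confidence interval. The scores are invented for illustration, and the interval uses a normal approximation; for a sample this small, an interval based on the t distribution would be somewhat wider.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def mean_with_ci(values, confidence=0.95):
    """Return (estimate, lower, upper) using a normal approximation."""
    estimate = mean(values)
    standard_error = stdev(values) / sqrt(len(values))
    # For 95% confidence this is the familiar multiplier of about 1.96.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    margin = z * standard_error
    return estimate, estimate - margin, estimate + margin


# Hypothetical per-person decreases in anxiety score, invented for illustration.
decreases = [7, 3, 12, 9, 5, 8, 1, 11, 6, 10, 4, 13, 7, 2, 9, 8, 6, 5, 10, 4]
estimate, lower, upper = mean_with_ci(decreases)
print(f"estimated decrease: {estimate:.1f}, 95% CI [{lower:.1f}, {upper:.1f}]")
```

Reporting the estimate together with its interval keeps attention on how large the effect is and how precisely it has been measured, rather than on which side of .05 a p value happens to fall.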

I refer to estimation as one of the “new statistics”. The techniques themselves are not new, but using them as the main way to draw conclusions from data would for many researchers be new, and a big step forward. It would also help avoid the distortions caused by p hacking.


License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.
