Inside the battle to counteract the COVID-19 'infodemic'

Media and tech organizations are rapidly trying to curb the spread of COVID-19 myths online. Image: REUTERS/Daniel Acker

- The 'infodemic' of false information about COVID-19 can have dangerous consequences.

- Here's how media and tech companies are responding to share accurate information and fact-check claims.

- Challenges remain in ensuring and valuing accurate content and media.

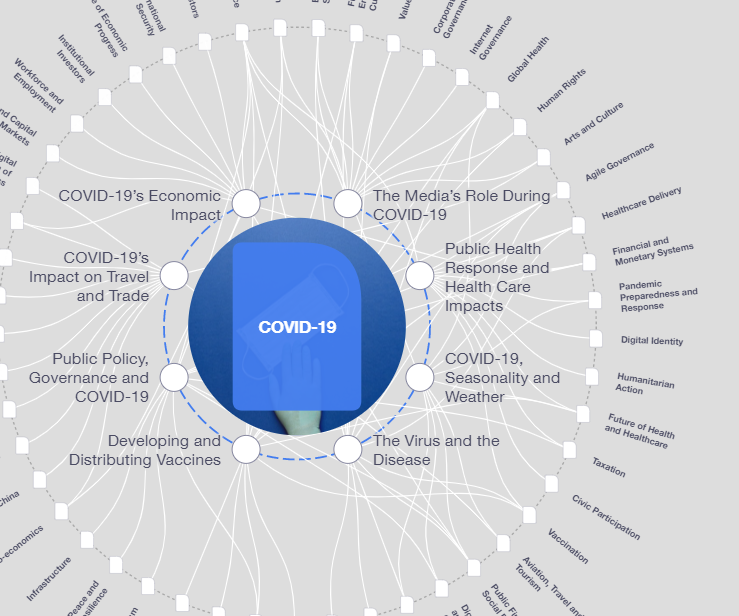

In the global response to fight the COVID-19 pandemic, one thing is becoming increasingly clear: accurate information can play an important role in public safety. Inaccurate, unsubstantiated, and fabricated content proliferating online - creating a parallel "infodemic" - can have particularly dire consequences during this public health emergency, raising the urgency for meaningful efforts to counteract these claims.

The dangers of misinformation

The types of false information on COVID-19 vary in their theme, scope and reach. Among the most dangerous content is inaccurate health advice on how to “prevent” or “cure” the infection, such as taking a specific drug. For example, a 5-year-old boy in Iran is now blind after his parents gave him toxic methanol in the unfounded hope that it would protect him from COVID-19. In Nigeria, three people overdosed on chloroquine after hearing claims it could help treat coronavirus. Such inaccurate claims represent a clear and direct danger not only to the individual but also to public health.

Aside from health inaccuracies, there are also politically and racially charged unscientific claims about COVID-19. These stories, which falsely claim that people of a specific origin are more likely to spread COVID-19, have led to several cases of hate speech, discrimination and even physical attacks.

How tech companies are responding

Recognizing the risks and gravity of the situation, major social networks and tech companies issued a joint statement announcing a coordinated response to combat “fraud and misinformation about the virus.”

On a recent World Economic Forum COVID Action Platform call with business and government leaders, Ruth Porat, the Chief Financial Officer and Senior Vice President of Alphabet and Google, highlighted that “Every company will have a different core strength that it can leverage to make a difference. I firmly believe the key point is that each company mobilizes and contributes where it can. Collectively we can make a difference.”

While social networks have grappled with curbing the spread of harmful content on their platforms before the COVID-19 outbreak, there is now a greater emphasis and urgency on removing or blocking inaccurate content rather than just flagging or downgrading it in the news feed.

Facebook recently started removing posts claiming that physical distancing doesn’t help prevent the spread of the coronavirus. Facebook also recently removed a post by Brazilian President Jair Bolsonaro that claimed that the drug “hydroxychloroquine is working in all places.” Google took similar action when it banned the Infowars app on the Android store after founder Alex Jones disputed the need to quarantine to prevent the spread of COVID-19.

There is also now close cooperation between many social networks and teams of fact-checking experts from recognized global and national health authorities to identify which claims are verifiably false and therefore warrant removal. “It’s critical that people get accurate information from public health experts and organizations right now,” says Nick Clegg, VP of Global Affairs and Communications at Facebook.

Since the outbreak of COVID-19, there has been a greater emphasis on providing search and social users with credible information from trusted sources through alert banners and information hubs. “Since the early days of this public health emergency, we’ve been working closely with the World Health Organization and other national health authorities to make sure people get accurate information, and taking aggressive steps to stop misinformation and harmful content from spreading,” Clegg says.

Facebook, YouTube and other content-sharing platforms have also provided the WHO and several NGOs with advertising credits so that they can quickly and freely launch campaigns to reach users with quality information.

The challenge of messaging apps

Messaging apps are another arena in the battle against the tide of COVID-19 misinformation. Given the privacy-focused nature of these products, operators have taken a different approach from the one used on social platforms such as Instagram or Facebook. Knowing that COVID-19 misinformation usually spreads through chain messages, both of Facebook’s messaging platforms, WhatsApp and Messenger, are now flagging messages that have been sent multiple times.

WhatsApp has limited the number of times a message may be forwarded. It has also introduced the WHO Health Alert Service notifying its users about the latest COVID-19 developments and launched chatbots in several jurisdictions to allow people to contact their national health authorities with inquiries about COVID-19.

Strict rules for ads and limited monetization

Tech companies have also taken steps to stop those who are looking to exploit the public for profit during this crisis. Almost all search engines and social networks initially prohibited advertising for products and services mentioning the coronavirus infection. Google, however, is now allowing advertisers working directly on issues related to the pandemic, such as government entities, hospitals, medical workers and NGOs, to mention COVID-19, given that it is “an ongoing and important part of everyday conversation,” according to Google’s Marc Beatty.

Nevertheless, most online platforms are no longer running ads for face masks, sanitizers and other protective equipment. Labelling the current pandemic a “sensitive event,” YouTube has made monetization of all video content related to COVID-19 conditional on strict compliance with its requirements of factuality and sensitivity. Initiatives such as the Global Alliance for Responsible Media are bringing together the entire media ecosystem to demonetize harmful content, beyond COVID-19.

Many regulatory and governmental bodies, including the FTC and FDA in the United States, the EU, and Health Canada, are cracking down on advertisers and sellers of fraudulent COVID-19 products, but the sheer volume and breadth of claims remain a major challenge.

Are these measures working?

An Oxford study analyzing a sample of 225 pieces of misinformation from January to March found that 59% of posts rated as false or misleading by independent fact-checkers remained on Twitter, 27% on YouTube, and 24% on Facebook.

In a recent Washington Post article, many platforms highlighted that enforcement of some recent policy changes may not have been fully reflected in the data set, which came from a period when policies were still being shaped or adjusted. Enforcement of policies on many platforms has grown more aggressive over time, according to executives, as guidance from health bodies has been changing rapidly.

An interesting finding of the study was the significant role of top-down misinformation: content from politicians, celebrities, and other prominent public figures made up 69% of total social media engagement, which is a key measure of reach on social platforms.

People crave accurate news content

Many news outlets have also been ramping up their efforts to bust coronavirus myths. For example, the BBC published a myth-busting article rounding up key misconceptions, the CBC explained why you can't make an N95 mask out of a bra and The New York Times warned that drinking industrial alcohol will not protect against the coronavirus.

Yet while there has been a significant increase in news subscriptions tied to COVID-19 from customers looking to stay informed, news organizations are also experiencing staggering declines in advertising revenue as entire industries pull back their marketing spend. Many brands worry that being associated with coronavirus coverage will hurt how they are perceived, a concern that runs counter to current research. Other companies that are running ads are blocking them from showing up alongside coronavirus coverage, with “coronavirus” now the most common word on block lists for news publishers.

These challenges highlight the need for a balance between open access to information and strategies to bring in revenue, according to analysis by Piano, a company that helps media companies build audiences and increase revenue. Finding viable business models to make sure publishers are compensated for the value provided to consumers is a key industry imperative in stopping the proliferation of harmful content.

Whether and how these tactics can be applied to other content areas once this crisis is over remains to be seen. Support for media in this fight against bad actors and misinformed users, in addition to global cooperation between the public and private sectors, will be central to success in the future.

Farah Lalani is the project lead and Juraj Majcin is an intern for Advancing Global Digital Content Safety at the World Economic Forum, which is exploring the future of content moderation.

License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.
