9 ethical AI principles for organizations to follow

- As organizations increasingly apply artificial intelligence (AI), they must consider ethical and moral concerns as a strategic priority.

- A PwC analysis proposes 9 ethical principles for organizations to track.

- Stakeholders need to work together to identify threats and co-create AI that will be beneficial and ethical for all parties.

Organizations around the globe are becoming more aware of the risks artificial intelligence (AI) may pose, including bias and potential job loss due to automation. At the same time, AI is providing many tangible benefits for organizations and society.

For organizations, this creates a fine line between the potential harm AI might cause and the costs of not adopting the technology. Many of the risks associated with AI have ethical implications, but clear guidance can provide individuals and organizations with recommended ethical practices and actions.

Three emerging practices can help organizations navigate the complex world of moral dilemmas created by autonomous and intelligent systems.

1. Introducing ethical AI principles

AI risks continue to grow, but so does the number of public and private organizations that are releasing ethical principles to guide the development and use of AI. In fact, many consider this approach the most efficient proactive risk mitigation strategy. Establishing ethical principles can help organizations protect individual rights and freedoms while also augmenting wellbeing and the common good. Organizations can adopt these principles and translate them into norms and practices, which can then be governed.

These organizations range from tech companies to religious institutions, and some have even called for expanding laws derived from science fiction. As of May 2019, 42 countries had adopted the Organization for Economic Co-operation and Development’s (OECD’s) first set of ethical AI principles, with more likely to follow suit.

The landscape of ethical AI principles is vast, but there are some commonalities. We reviewed more than 90 sets of ethical principles, which together contain over 200 principles, and consolidated them into nine core ethical AI principles. Tracking the principles by company, type of organization, sector and geography enables us to visualize the concerns around AI that they reflect, and how those concerns vary across these groups. The core principles can then be translated and contextualized into norms and practices, which can in turn be governed.

These core ethical AI principles are derived from globally recognized fundamental human rights, international declarations and conventions or treaties — as well as a survey of existing codes of conduct and ethical principles from various organizations, companies and initiatives.

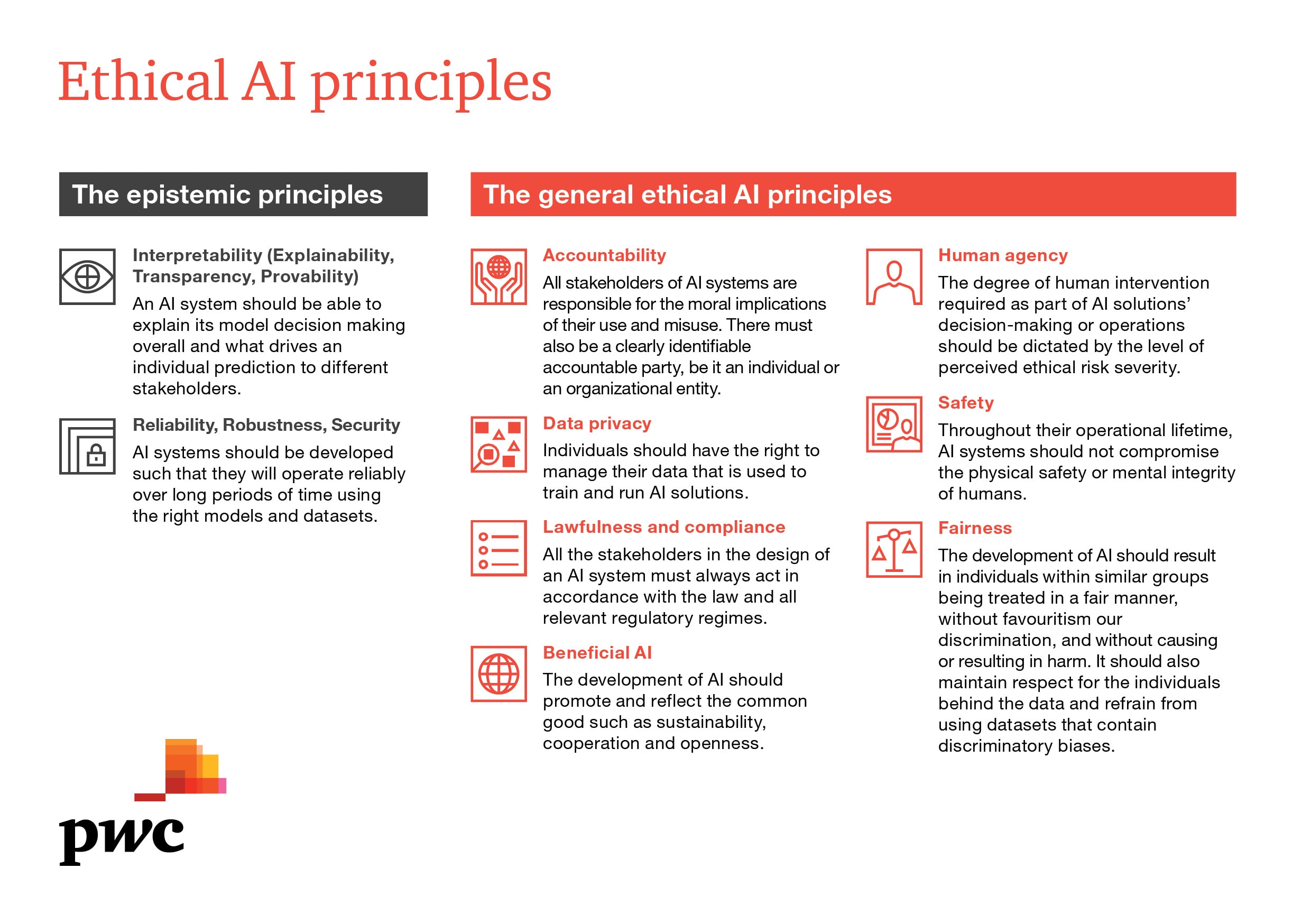

The nine core principles can be distilled into epistemic and general principles and can provide a baseline for assessing and measuring the ethical validity of an AI system. The landscape of these principles is meant to be used to compare and contrast the AI practices currently adopted by organizations, and they can then be embedded to help develop ethically aligned AI solutions and culture.

Epistemic principles constitute the prerequisites for an investigation of AI ethicality and represent the conditions of knowledge that enable organizations to determine whether an AI system is consistent with an ethical principle. They include principles on interpretability and reliability.

General ethical AI principles, meanwhile, represent behavioral principles that are valid in many cultural and geographical applications and suggest how AI solutions should behave when faced with moral decisions or dilemmas in a specific field of usage. They include principles on accountability, data privacy and human agency.

Different industries and types of organizations, including government agencies (like the US Department of Defense), private-sector firms, academia, think tanks, associations and consortiums, gravitate toward different principles. While all organizations prioritize fairness, safety is more common in industries involved with physical assets as opposed to information assets. Lawfulness and compliance, however, most frequently appear in principles released by governmental agencies, associations and consortiums, and while all organizations must follow the law, few recognize law as an ethical principle.

2. Contextualizing ethical AI principles

Cultural differences play a crucial role in how those principles should be interpreted. Therefore, the principles should first be contextualized to reflect the local values, social norms and behaviors of the community in which the AI solutions operate.

These "local behavioral drivers" fall into two categories: compliance ethics, which relates to the laws and regulations applicable in a certain jurisdiction, and beyond-compliance ethics, which relates to social and cultural norms. During the contextualization process, investigators must seek to identify stakeholders, their values and any tensions and conflicts that might arise for them through the use of the technology.

Why is contextualization important? Let’s consider fairness. There has been much discussion about the many ways fairness can be measured, with respect to an individual, a given decision and a given context. Simply stating that systems need to be "fair" does not provide instructions on the who, what, where and how that fairness should be implemented, and different regulators have varying views on fairness. Contextualization would require key stakeholders to define what fairness means for them.

For example, equal opportunity in hiring can be defined differently from one jurisdiction to another. In the US, the Equal Employment Opportunity Commission (EEOC) assesses equal opportunity with respect to a selection rate (the proportion of applicants in a group who are selected for a job), with a tolerance defined by the "four-fifths rule": a group whose selection rate is less than four-fifths (80%) of the rate for the group with the highest rate is generally regarded as showing evidence of adverse impact. In the UK, the Equality Act 2010 offers protection against both direct and indirect discrimination, whether it is generated by a human or by an automated decision-making system.
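To make the selection-rate comparison concrete, the following is a minimal Python sketch of a four-fifths check. The function names, the group labels and the applicant counts are hypothetical and chosen only for illustration; the 0.8 threshold is the four-fifths tolerance mentioned above. It is a rough screening heuristic under those assumptions, not a legal test and not a method described in the PwC analysis.

```python
from typing import Dict, Tuple


def selection_rates(outcomes: Dict[str, Tuple[int, int]]) -> Dict[str, float]:
    """Map each group to selected / applicants.

    `outcomes` maps a group label to (number selected, number of applicants).
    """
    rates = {}
    for group, (selected, applicants) in outcomes.items():
        if applicants <= 0:
            raise ValueError(f"group {group!r} has no applicants")
        rates[group] = selected / applicants
    return rates


def four_fifths_check(
    outcomes: Dict[str, Tuple[int, int]], threshold: float = 0.8
) -> Dict[str, Tuple[float, bool]]:
    """Compare every group's selection rate with the highest group's rate.

    Returns group -> (impact ratio, passes), where `passes` is True when the
    ratio is at least `threshold` (four-fifths by default).
    """
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        raise ValueError("no applicants were selected in any group")
    return {g: (r / best, r / best >= threshold) for g, r in rates.items()}


if __name__ == "__main__":
    # Hypothetical counts: (selected, applicants) per group.
    example = {"group_a": (48, 80), "group_b": (12, 30)}
    for group, (ratio, passes) in four_fifths_check(example).items():
        status = "within tolerance" if passes else "below four-fifths"
        print(f"{group}: impact ratio {ratio:.2f} ({status})")
```

In this made-up example, group_a is selected at a rate of 60% and group_b at 40%, so group_b's impact ratio is about 0.67 and falls below the tolerance. A different jurisdiction or stakeholder group could reasonably choose a different fairness definition, which is the point of contextualization.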

3. Linking ethical AI principles to human rights and organizational values

Tying ethical principles to specific human rights is paramount to limit regulatory ambiguity. Furthermore, instilling human rights ideas as a foundation of AI practices helps to establish moral and legal accountability, as well as the development of human-centric AI for the common good. This approach aligns with the European Commission’s ethics guidelines for trustworthy AI.

It’s also necessary to align ethical principles with organizational values, existing business ethics practices and business objectives, so that relevant ideas are explicitly translated into specific norms that shape the design, governance, development and use of AI systems. Organizations should create AI ethics frameworks that are actionable, with clear accountability and concrete methods of monitoring.

License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.
